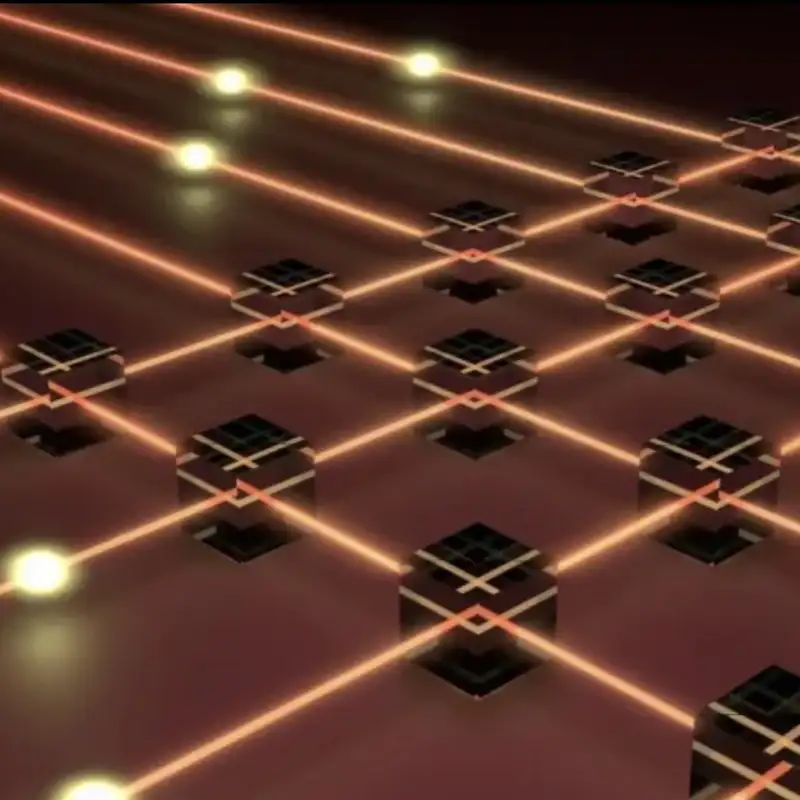

Quantum Network Simulation

Dan: Hello and welcome

to The Quantum Divide.

This is the podcast that talks about

the literal divide between classical IT

and quantum technology,

and the fact that these two domains

will, and need to,

become closer together.

We're going to try and focus on

networking topics.

Quantum networking actually is

more futuristic than perhaps

the computing element of it.

But we're going to try

and focus on that domain.

But we're bound to experience many

different tangents both in podcast

topics and conversation as we go on.

Enjoy.

Okay, Steve, how are you doing?

Steve: Same old doing.

Bit of a rough voice today, but should

be able to power through for an hour.

Dan: Good.

Good.

No, I'm looking forward

to this discussion.

Simulation to me can mean

so many different things, and

I know simulation in the traditional IT

world covers many different aspects of

virtualization and end-to-end systems.

I think it's probably gonna be quite

different in the quantum networking world.

So why don't we start first of all by just

getting a view on what network simulation

is for quantum networks specifically.

Steve: Yeah.

For quantum networks, simulation

can mean a couple of things.

For me, it's about

simulating, using software, and executing

events in some sequence, and then

processing those events in some way

so that you can learn particular

aspects of whatever you wanna simulate.

That includes the physical layer: how

your simulation behaves with respect

to a particular fiber loss parameter

or a particular noise parameter in

the hardware. There are a lot of aspects

you can simulate using physical-layer

and all the other kinds of models.
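To make the fiber loss parameter concrete, here is a minimal Python sketch that turns an attenuation figure in dB/km into the probability that a photonic qubit survives the link, using the standard relation eta = 10^(-alpha * L / 10). The 0.2 dB/km default is an assumption (a commonly quoted telecom-fiber value), not a number from the conversation, and the function name is purely illustrative.

```python
def transmittance(length_km: float, loss_db_per_km: float = 0.2) -> float:
    """Probability that a photonic qubit survives a fiber of the given length.

    Standard attenuation relation: eta = 10 ** (-alpha * L / 10), with alpha
    in dB/km. The 0.2 dB/km default is an illustrative telecom-fiber value.
    """
    if length_km < 0 or loss_db_per_km < 0:
        raise ValueError("length and loss must be non-negative")
    return 10 ** (-loss_db_per_km * length_km / 10)


# Sweep the fiber-loss parameter over a few link lengths.
for km in (0, 10, 50, 100):
    print(f"{km:>4} km -> survival probability {transmittance(km):.4f}")
```

Every 50 km of such fiber costs another factor of ten, which is why loss dominates physical-layer studies.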

But for me, simulation primarily means

writing code that actually processes

events. In another direction,

what people sometimes refer to as

simulation is taking a mathematical

model and then applying a mathematical

model to another mathematical model

in order to arrive at some kind of

analytical equation that produces

trends on a plot,

which is also a form of simulation.

So we simulate applying mathematical

models to mathematical models and you

get nice curves that are smooth in most

cases, and they are executed very quickly.
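The contrast between the two meanings of "simulation" can be sketched in a few lines of Python. Assuming each transmitted qubit arrives independently with probability eta (a simplifying assumption), the closed-form model says the number of attempts until the first arrival is geometric with mean 1/eta; the event-by-event version actually sends qubits one at a time. The closed form is instant and smooth, while the sampled estimate is noisy and only converges with many runs.

```python
import random
import statistics


def analytic_mean_attempts(eta: float) -> float:
    """Closed form: attempts until the first arrival are geometric, mean 1/eta."""
    return 1.0 / eta


def simulated_mean_attempts(eta: float, runs: int, seed: int = 7) -> float:
    """Event by event: send qubits until one arrives, repeated `runs` times."""
    rng = random.Random(seed)
    counts = []
    for _ in range(runs):
        attempts = 1
        while rng.random() >= eta:  # this qubit was lost, send another
            attempts += 1
        counts.append(attempts)
    return statistics.mean(counts)


for eta in (0.5, 0.1, 0.01):
    print(
        f"eta={eta:<5} analytic={analytic_mean_attempts(eta):7.2f}  "
        f"simulated(100 runs)={simulated_mean_attempts(eta, 100):7.2f}  "
        f"simulated(20000 runs)={simulated_mean_attempts(eta, 20_000):7.2f}"
    )
```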

Dan: Okay, let me take a step back for

a second, just to compare with

simulation in the traditional IT world:

building a virtualized

network to test a particular thing.

It's evolved significantly over the years.

It used to be quite difficult to do, but

that changed with the advent of virtualization

and of virtual software images.

These days when you simulate a network,

you are literally taking software versions

of network nodes as they would run in

hardware or in software in production.

And because they typically all connect

via native

Ethernet connections, which can

be simulated in a virtual switch,

you get a real replica of the network,

with the same software features

that you would have in production.

It is typically a carbon copy,

or at least a cut-down version of

the different elements of the network,

so you can test particular features.

Obviously those types

of things are used for training and

education, for simulating tests, and for

simulating changes that you then want to

implement in production. With

quantum networks, by contrast,

the software to run the actual nodes

doesn't really exist in a standard format

or in any productized format anywhere.

You still have the same

number of layers to deal with.

That is, physical forwarding

of packets or qubits.

And then decision making.

Each node has to have some type of

intelligence in the way that it is

receiving the qubits, sending the

qubits, dealing with the qubits, building

entanglement and those kinds of things.

But we can't really compare the two, can

we, and say that they're similar, right?

They're both forms of simulation, but

quantum simulation, from what I can

tell, is much more theoretical.

And that's understandable

because of where we are in terms

of market maturity and so on.

Right.

Steve: Yeah that's exactly

how I see it as well.

So, like you said, classical networks

have well-defined layers. We have

operating systems, they have software

involved with those things, and in

classical networks we're not really at the stage

anymore where we actually care much about

fiber loss in the channel, or at least

not at the simulation stage. For the general

population, we're mostly concerned

with how we control the network and

upgrade the protocols for communication.

But the physical hardware, that's

a separate class for most people.

But in quantum networks, that's pretty

much all we care about right now:

how does the physical layer affect the

protocol we're trying to implement?

Because we have theoretical ideas on

how to write a protocol that achieves

some property, perfect security, blind

computation, all these things that

require transmission of quantum bits.

But when you introduce noise, then a

lot of things fall apart and we don't

have protocols to accommodate noise.

So right now we mostly care

about the noise. We care about how to

modify our protocols so that

we can deal with the noise.

So first we need to know how

noise affects the protocol.

And that's the stage quantum networking is at.

So we mostly simulate for that property.

And also the layers above the physical

layer are not really well defined

anyway, so the virtualization of

network nodes is not ready yet.

So one company might build a

network virtualizer and a second company

might build another, but those won't have anything

in common because there are no standards.

So that's too early,

I would say.

But it's coming.

I think especially for quantum key

distribution, we can probably start

thinking about this overlap with

the classical world, because we're beyond

the point where we don't understand

what the physical layer does to QKD.

We're past that stage.

We know what it does, and now

we need to start thinking about

programming the QKD network.

And that's where the classical analogy,

just classical software, can come in.

Dan: So we can't compare 'em because

they're not really doing the same

thing, even though it's still a network.

Like you said, it really is more

of a physical-layer set of models.

And noise.

You mentioned noise.

It's the same with

quantum computing, right?

There's so much noise and we're

still learning how to deal with it.

Error correction is

improving its efficacy.

But it's still not good enough

where we can achieve what we call a

logical qubit, which is essentially

errorless and can function reliably.

Quite often the number of logical

qubits you have is smaller

than the number of physical qubits, right?

Because there's gonna be a

percentage of error rate.

But that's improving over time.

It's probably the same

with the network, right?

We can talk about that in a minute.

I think noise is a really good topic,

because if the key to the

way software is written at the

moment is learning and planning how to

deal with noise in the network, then

we should definitely focus on that.

But first of all why

do we need simulation?

I mean, there's the obvious fact that

the hardware doesn't exist yet and that we

can do things like feasibility tests of

the way different nodes interact with

each other, but ultimately, when it comes

down to testing the systemic function

of a network, the way that the different

nodes interact, the forwarding,

whether there's entanglement and so

on, isn't it difficult to effectively

simulate all of this in software?

And if that's the case, then isn't there

a huge amount of work that's gonna take

place in simulation, which actually

isn't gonna be useful when it comes

to putting it into physical systems?

Or is the simulation part

of the product development?

Steve: Yeah, from my perspective,

simulation is essential.

Definitely for the first reason

you said, we don't have the

hardware to test our protocols.

We don't know if they're gonna work.

So we always have to model these

systems and then, once they're modeled

on paper using mathematical equations,

being able to really interact with

those models in an efficient way,

in my opinion, requires simulation.

And then we can understand how

the hardware behaves when you

interconnect things and put things

together and see how it works,

in a kind of engineering setting,

but a simulated engineering setting.

But the other part, thinking about

the efficiency of the simulations:

one advantage that networks have

over quantum computing

simulations is that a lot of the systems

are much smaller in size, so we don't

need to simulate huge entangled states.

So in quantum computing, the

classical limit is about 20 qubits

of entangled quantum states.

Maybe you can get to 40 or something.

It depends on how the implementation is

made, but certainly less than something

like 50. In a normal supercomputer, it is

about 50 qubits of entangled states. But

in quantum networks, we're not really

dealing with massive entangled states.

Maybe it's 10-qubit GHZ states.

But even that is rare.

Generally we're just working with Bell

pairs, and that's just a small matrix

and many copies of the small matrix.

So you can do much more complicated things

with more independent quantum systems.

So the efficiency is actually not too bad.
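The size argument can be checked with simple arithmetic. This sketch assumes 16 bytes per complex amplitude (double-precision complex) and ignores any framework overhead; it compares one monolithic register, stored as a state vector or as a density matrix, with a million independent Bell pairs, each stored as its own 4x4 density matrix.

```python
BYTES_PER_AMPLITUDE = 16  # one double-precision complex number (assumption)


def state_vector_bytes(n_qubits: int) -> int:
    """Memory for a pure n-qubit state vector: 2**n complex amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


def density_matrix_bytes(n_qubits: int) -> int:
    """Memory for an n-qubit density matrix: (2**n) x (2**n) complex entries."""
    return (4 ** n_qubits) * BYTES_PER_AMPLITUDE


def gib(n_bytes: int) -> float:
    return n_bytes / 2 ** 30


# One large entangled register versus many small, independent Bell pairs.
print(f"30-qubit state vector:    {gib(state_vector_bytes(30)):,.0f} GiB")
print(f"50-qubit state vector:    {gib(state_vector_bytes(50)):,.0f} GiB")
print(f"15-qubit density matrix:  {gib(density_matrix_bytes(15)):,.0f} GiB")
pairs = 1_000_000
print(f"{pairs:,} Bell pairs (4x4 each): {gib(pairs * density_matrix_bytes(2)):.2f} GiB")
```

The cost grows exponentially with the size of a single entangled state, but only linearly with the number of independent small states, which is the advantage described above.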

That doesn't mean it's perfect.

There are a lot of things that

come with overhead.

Now we don't only need to

consider what the protocol does.

A lot of the network features

involve timing aspects.

So you also have to think about how

you measure time in the simulation.

That's a big aspect.

And that could be done efficiently

or it can be done inefficiently.

So there's different ways to

do it, depending on what you

need your simulation to do.

Yeah.

And so we can get into the

topic of how time is tracked in

quantum network simulation.

It's quite an interesting

topic actually, I think.

There are really two ways

we do timing in quantum network

simulation, and the primary one

is called discrete event simulation.

The main benefit of discrete event

simulation is that you don't have to wait the

amount of time your simulation covers.

If your simulation covers

a year, you don't actually

have to wait a year.

You simulate the time so that a year

can pass in a much more efficient way.

Not only that, you can define

your unit of time much more easily.

So you can trigger things to happen

at whatever unit scale you want.

If you need things to trigger in microsecond

units, you can program it to do that.

And then what happens is an

event is triggered and then

a random amount of time is

programmed as a delay.

You don't actually wait that much time.

The simulation engine will just say the

next event happened some amount of time

later, and this happens very quickly,

so your simulation runs very efficiently.
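The core of a discrete event engine is a simulated clock plus a queue of events ordered by time. The toy Python sketch below is not NetSquid's engine or API; it only illustrates the idea. It uses a fixed 1-microsecond delay between photon emissions for readability (a random delay could be drawn instead), and it schedules an event a full simulated year later; `run()` still returns almost immediately because the clock jumps from event to event rather than waiting.

```python
import heapq
import itertools
from typing import Callable, List, Tuple


class DiscreteEventSimulator:
    """Toy discrete-event engine: a simulated clock plus a time-ordered event queue."""

    def __init__(self) -> None:
        self.now = 0.0  # simulated seconds; nothing here sleeps in wall-clock time
        self._queue: List[Tuple[float, int, Callable[[], None]]] = []
        self._tiebreak = itertools.count()  # keeps equal-time events in FIFO order

    def schedule(self, delay: float, action: Callable[[], None]) -> None:
        """Run `action` when the simulated clock reaches now + delay."""
        heapq.heappush(self._queue, (self.now + delay, next(self._tiebreak), action))

    def run(self) -> None:
        """Process events in time order, jumping the clock straight to each one."""
        while self._queue:
            self.now, _, action = heapq.heappop(self._queue)
            action()


sim = DiscreteEventSimulator()
log: List[Tuple[float, str]] = []


def emit_photon(index: int) -> None:
    log.append((sim.now, f"photon {index} emitted"))
    if index < 3:
        sim.schedule(1e-6, lambda: emit_photon(index + 1))  # next photon 1 microsecond later


sim.schedule(0.0, lambda: emit_photon(1))
sim.schedule(365 * 24 * 3600.0, lambda: log.append((sim.now, "one simulated year later")))
sim.run()  # returns almost instantly even though a simulated year passes
for t, message in log:
    print(f"t = {t:.6f} s  {message}")
```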

On the other hand, there's real-time

event simulation, where you lose

track of when events are triggered

because you're running things based

on how fast your computer can execute.

There you really don't have control

over the timing aspects unless you put

a layer of timing on top, of course.

But in the raw form of real-time

event simulation, you're basically

working at the speed of your CPU.

And there are advantages to that too.

It makes it easier to do things

like distributed simulation.

So if you have a network of

computers working together to

simulate a network, it's much

easier to develop this kind of thing

using real-time simulation. So

both have advantages and disadvantages.

Primarily we're using discrete

event simulation because

we just wanna know how the

physical layer affects the protocol.

But when we're thinking about things

like actually implementing communication

protocols, and we don't really think

about the noise in those cases, then

real-time event simulation has the

advantage that you can actually put

messages onto the internet, have them

come back down, and run things in

a more networky way, let's say.

Dan: Okay, so that covers some of

the ways that

thinking about time is different when

it comes to quantum network simulation.

What would help me, I think, is

to get a feel for what a simulated

quantum network looks like.

By that I mean, in the traditional world

I may have a number of different

nodes, each with their own OS;

there are connections between them.

There's traffic being sent and routing

protocols between the different nodes.

I understand that we're looking more

at the hardware layer, but what

is it that makes up, let's say, a generic

network simulation?

Okay.

Obviously the nodes have some

kind of software, some code running,

but can you give us a view on what

that would look like and what types of

connections they have between each other?

I imagine there's a quantum and a

classical channel of some kind, and

I know that there are methodologies

or frameworks out there, so perhaps

we could flow onto that

as the next topic as well.

Steve: Yeah, I think in general,

most of these frameworks have

the same core ideas, and how they

implement those core ideas

differs depending on the framework.

But there's a lot of overlap

between the frameworks as well.

So generally, you start with having

to define the network topology.

So that means setting up all the

nodes, piece by piece, defining

the connections between the nodes,

and then the next step is to define the

properties for each of those pieces.

So you say, what's the

loss of the channel?

How much noise does the channel introduce?

How that noise is introduced.

There's a lot of properties,

depending on the components.

Then you can think about things

like, how are the nodes implemented?

What components do the nodes have?

Do they include X hardware

or Y hardware?

So you have to set up all those

pieces before you start writing

the logic of the simulation.

That's the next step: to

write the logic of the simulation.

Each node has a functionality.

You program the functionality and then

you assign the functionality to the nodes

that should execute that functionality.

And then you run the simulation and you

wait for it to finish.

All the events get triggered.

Then you have some statistics out.

It depends on how you program it,

but that's usually how it goes.
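The workflow just described (define the topology, set per-component properties, describe the node hardware, write and assign the logic, run, then collect statistics) can be sketched in plain Python. Every class, field, and parameter name here is hypothetical and chosen only to mirror those steps; it is not the API of NetSquid, QuNetSim, or any other framework, and the loss figure reuses an assumed 0.2 dB/km fiber.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Channel:
    """Step 2: per-link properties (hypothetical parameter names)."""
    length_km: float
    loss_db_per_km: float = 0.2
    depolar_prob: float = 0.01

    def survival_prob(self) -> float:
        return 10 ** (-self.loss_db_per_km * self.length_km / 10)


@dataclass
class Node:
    """Step 3: a node, labelled with the hardware it is assumed to carry."""
    name: str
    hardware: str = "generic quantum memory"
    stats: Dict[str, int] = field(default_factory=lambda: {"sent": 0, "received": 0, "noisy": 0})


@dataclass
class Network:
    """Step 1: the topology, i.e. nodes plus the channels connecting them."""
    nodes: Dict[str, Node] = field(default_factory=dict)
    channels: Dict[Tuple[str, str], Channel] = field(default_factory=dict)

    def add_node(self, node: Node) -> None:
        self.nodes[node.name] = node

    def connect(self, a: str, b: str, channel: Channel) -> None:
        self.channels[(a, b)] = channel


def send_qubits(net: Network, src: str, dst: str, count: int, rng: random.Random) -> None:
    """Step 4: the logic run by a sender/receiver pair over one channel."""
    channel = net.channels[(src, dst)]
    for _ in range(count):
        net.nodes[src].stats["sent"] += 1
        if rng.random() < channel.survival_prob():  # qubit survived the fiber
            net.nodes[dst].stats["received"] += 1
            if rng.random() < channel.depolar_prob:  # but picked up noise
                net.nodes[dst].stats["noisy"] += 1


# Build the network, run the logic, and read out statistics (step 5).
net = Network()
net.add_node(Node("Alice"))
net.add_node(Node("Bob"))
net.connect("Alice", "Bob", Channel(length_km=25))
send_qubits(net, "Alice", "Bob", count=10_000, rng=random.Random(42))
print(net.nodes["Alice"].stats, net.nodes["Bob"].stats)
```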

It depends on the simulation framework;

some are more in-depth than others.

The simulation engines that

exist today that are more commonly

used are primarily for

discrete event simulation, but there

are also some real-time simulators.

For me, my favorite one

right now is called NetSquid.

NetSquid is a discrete event

simulation engine that uses qubits

for the information processing

and the information encoding.

Not all of them use qubits, by the way.

That's an important feature.

You could also do things using

continuous-variable systems with

other simulators, or different encoding

methods, but NetSquid uses an

abstract qubit model where it's not really

defined how the qubit is implemented.

It's just a qubit.

Is it a photon?

Is it an electron?

Is it a superconductor? Somehow it's just

a qubit and it goes through the network.

We don't know what it's made out of.

But it has a lot of features, a lot

of power, a lot of diversity.

I don't know, you can do a

lot of cool things with it.

A lot of the modules are already

programmed into the system, or

into the engine, and you just Lego

them together to build your

simulation with all the components.

And then, it's not easy at first.

It takes a lot of learning to figure out

how to use NetSquid and use it properly.

But once you learn it, it's

really powerful and you can

really simulate complex systems.

And then on the other hand, there's

QuNetSim, which is a real-time event simulator

that takes away all the complexity

of network simulation and makes it

as easy as possible to get started.

QuNetSim is more of an educational tool, but

it still has those kind of core properties

where you have to define the topology and

write the logic for each of the nodes.

But you have to wait a little longer

for the simulation to run because

it's running at the speed of the

computer running the simulation.

Yeah, and there are two others

that I'll mention. One is QKDNetSim,

which simulates the layers above

the physical layer, specifically

to determine how to implement a

quantum key distribution network.

So it gives you the bits

in the classical form.

It has nothing to do

with the physical layer.

You're actually ignoring the

physical layer in the simulator.

But it allows you to implement

the software layer of the quantum

key distribution network and

simulate that part of the network.

And then the last one I'll

mention is called SeQUeNCe.

And this I'm not as familiar

with, but as far as I understand,

it's more about the optics.

So it looks at the physical components

more closely than NetSquid does, and

you're talking about real qubit models.

These are photons using, like, time-bin

encoding or polarization encodings.

You have photon detectors and all these

real optical components, and they're

explicitly defined as optical components.

So it's really at the layer of the

lab; you're basically simulating

an optical table at this point.

It's harder to grasp than NetSquid,

I'd say, because you need to know

what a single-photon source is,

what a photon detector is,

how you encode information

in a time-bin encoded photon.

So it's much more involved.

But in the end you get more accuracy.

You essentially have the realism of a lab

on your computer.

So there are different levels

of difficulty, different levels of

realism, and different uses for each one.

Dan: So there isn't one size fits all.

It depends on what you want to simulate.

It sounds like NetSquid might be

the one that allows you to do all

of the layers, or at least have

better control of all of the layers.

But, as you said, it's

more complex to manage and learn.

Let me go on to something

that the methodologies do.

You mentioned earlier on simulating noise.

So for some of them, perhaps QKDNetSim,

there is no need for noise

simulation because it's more about

the logical interaction between

qubits and the different components.

Is that the case?

And what about the other ones

where there's more

detailed control over the noise: what

kind of level of things are you tuning?

Is it about, you know, things

in a physical fiber that cause

loss, like connector loss, intrinsic

absorption of photons and things?

But it probably doesn't go

down to that physical layer, right?

It's more about X number of photons

being lost between certain points,

and therefore the nodes have to

be able to deal with that somehow.

I'm thinking of it

like packet loss: photon loss.

Steve: So actually, each of these network

simulators handles loss in some way.

Each one has its own way of handling

noise and loss in the network.

And most of them work at the

qubit level, except QKDNetSim.

So let me talk about the ones

that do it at the qubit level.

Dan: Okay.

Steve: Generally, the way you would

model this is you have your channel.

In the programming languages, you can

set the error model.

An error model acts qubit by qubit.

So it says, when the qubit enters the

channel, apply this error model to it,

and apply it with some probability.
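A per-qubit error model of the kind Steve describes can be sketched with a 2x2 density matrix in plain Python. The channel below is an illustrative assumption, not any framework's API: with probability `p_loss` the qubit is lost (the receiver gets nothing back to inspect), and otherwise a depolarizing error model, rho -> (1 - p) rho + p I/2, is applied to it.

```python
import random
from typing import List, Optional

Matrix = List[List[complex]]  # 2x2 single-qubit density matrix


def depolarize(rho: Matrix, p: float) -> Matrix:
    """Depolarizing error model for one qubit: rho -> (1 - p) * rho + p * I / 2."""
    return [
        [(1 - p) * rho[i][j] + (p / 2 if i == j else 0.0) for j in range(2)]
        for i in range(2)
    ]


def channel_transmit(rho: Matrix, p_loss: float, p_depol: float,
                     rng: random.Random) -> Optional[Matrix]:
    """Apply the channel's error model to a single qubit entering the channel.

    With probability p_loss the qubit is lost and None is returned, so the
    receiver gets nothing to inspect; otherwise depolarizing noise is applied.
    """
    if rng.random() < p_loss:
        return None
    return depolarize(rho, p_depol)


ket0: Matrix = [[1.0, 0.0], [0.0, 0.0]]  # |0><0|
rng = random.Random(3)
for _ in range(5):
    out = channel_transmit(ket0, p_loss=0.3, p_depol=0.1, rng=rng)
    print("lost" if out is None else f"arrived, P(0) = {out[0][0].real:.3f}")
```

Note the asymmetry: loss is sampled qubit by qubit, while the depolarizing noise is applied as a mixture on the density matrix, so a surviving qubit carries the noise without any flag saying it was disturbed.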

And that's really low level.

So you're looking at really the

bits, the bits in the packet.

It's not like you have packet loss; you

have, like, bits in the payload being lost.

And there's no way to know. There's no

way to read the header, do a checksum,

and say, okay, the packet was corrupt.

It's like the qubits arrive

and they're just missing.

Dan: Yeah, you can't read them.

Steve: Exactly.

So there are tricks and stuff

in practice, but generally you

don't know that there's been a loss.

There's loss, but you don't know

immediately; you have to do some

procedure, and you might not even know

until you measure the entire system.

Because you might need to think

about the frequency of transmission

and the frequency of detection.

So if those things don't match,

say you're

sending one per second and you

receive one every two seconds, then

maybe some of them are lost.

But generally it's still

harder to deal with.

It's a little harder to understand

loss and how to deal with loss

in quantum networks in general.

But then in simulation, at

least you have the ability to

think about those things, because

of course in simulation you have

this god-like ability to know

exactly every event that occurs

with losses, and you can

program something to happen.

But in practice, we

don't have that overview.

You don't know when loss is gonna happen.

So that's still always

under the umbrella of physical-layer

simulation, because we don't know

how to deal with that problem.

And then, as an exception, there's

QKDNetSim, which says

the packet had X amount of loss.

Do what you want.

The packet still arrived.

There's still something in the payload,

but now you have to do the processing

in whatever way you program it to.

You can set that

loss parameter, but it's not

at the qubit level anymore.

It's just a number.

The packet originally had

a hundred thousand qubits.

When it arrived, there were a thousand.

What do you do? So that's a detected event,

and you can program it accordingly.
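The count-based view of loss can be sketched in a few lines. This is a Python illustration of the idea only, not QKDNetSim's API: loss is a number attached to a packet, the receiver knows it, and a hypothetical policy decides what to do. The `min_received` threshold is an assumed example value.

```python
from dataclasses import dataclass


@dataclass
class KeyMaterialPacket:
    """Abstract packet: loss is just a number, not per-qubit state."""
    sent_qubits: int
    received_qubits: int

    @property
    def loss_fraction(self) -> float:
        return 1 - self.received_qubits / self.sent_qubits


def handle_packet(packet: KeyMaterialPacket, min_received: int = 10_000) -> str:
    """Hypothetical policy: keep the surviving bits only if enough arrived."""
    if packet.received_qubits < min_received:
        return f"discard: only {packet.received_qubits} of {packet.sent_qubits} arrived"
    return f"process {packet.received_qubits} bits ({packet.loss_fraction:.1%} lost)"


print(handle_packet(KeyMaterialPacket(sent_qubits=100_000, received_qubits=1_000)))
print(handle_packet(KeyMaterialPacket(sent_qubits=100_000, received_qubits=60_000)))
```

Because the loss is an explicit, detected event here, the interesting simulation work moves up to the key-management logic rather than the physics.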

Dan: So what's

the general impact of this loss

in these simulated situations, given

that you can't necessarily read

the qubits to check that they're

okay as they come into the interface?

Is it a matter of running

whatever calculation or process

is being run on the qubits, and then if

any are missing, it will fail?

That's why, certainly on a

quantum computer, you run multiple shots to get

a better probability spectrum of

what the answer is, if you like.

But now that you're introducing

loss over the network, that's gonna

affect the resulting curve

of what the responses look like, isn't it?

So a calculation

that's made across a network is going

to be more error-prone, basically.

Is that the end result, or are

you only looking at the situation

where the calculations are

local to the individual node?

Steve: Yeah, this is tricky.

It depends on what

application you're simulating.

So for QKD, generally we

program loss into the simulation to

determine the rate of key distribution.

So we would say, okay, every fifth

qubit is lost, and that decreases

the ability to generate key.

So per transmission, we can transmit on

average some number of bits, but because

of loss, that number could go down.

If every fifth qubit is lost,

then the average

rate of transmission decreases.
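The "every fifth qubit is lost" example translates directly into arithmetic. This Python sketch counts raw detections only (treating them as a proxy for raw key material, an assumption that ignores sifting and error correction) and compares the closed-form expectation, a deterministic every-fifth-qubit pattern, and independent random loss with the same 20% rate.

```python
import random


def expected_detections(n_pulses: int, p_loss: float) -> float:
    """Closed form: on average a fraction (1 - p_loss) of the qubits arrive."""
    return n_pulses * (1 - p_loss)


def every_nth_lost(n_pulses: int, n: int = 5) -> int:
    """Deterministic loss pattern: every n-th qubit is dropped."""
    return sum(1 for i in range(1, n_pulses + 1) if i % n != 0)


def random_loss(n_pulses: int, p_loss: float, seed: int = 0) -> int:
    """Probabilistic loss: each qubit is dropped independently with probability p_loss."""
    rng = random.Random(seed)
    return sum(1 for _ in range(n_pulses) if rng.random() >= p_loss)


pulses = 100_000
print("no loss:          ", expected_detections(pulses, 0.0))
print("every fifth lost: ", every_nth_lost(pulses, 5))
print("20% random loss:  ", random_loss(pulses, 0.2))
```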

Same with entanglement distribution.

Entanglement doesn't contain any

information, so we're not doing

anything logical with it, but

more loss decreases the rate of

entanglement distribution. With things

like distributed quantum computing

simulation, you might start to

think about that statistical curve of how

the measurement results were laid out.

And then there's noise.

What happens with loss

is you have to retry.

So usually in distributed quantum

computing, you need to establish

entanglement between nodes, and if that

takes longer, more noise gets into the

system and you get noisier outputs.
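The link between retries and noisier outputs can be illustrated with a toy model. Both ingredients are assumptions for illustration: entanglement attempts succeed independently with probability `p_success` (so retries are geometric), and a stored Bell pair's fidelity relaxes from 1 toward 1/4, the fully mixed two-qubit value, as exp(-t / T) while it waits. Lower success probability, for example from more loss, means longer waits and lower average fidelity.

```python
import math
import random
import statistics


def attempts_until_success(p_success: float, rng: random.Random) -> int:
    """Retry entanglement generation until one attempt succeeds (geometric)."""
    attempts = 1
    while rng.random() >= p_success:
        attempts += 1
    return attempts


def idle_fidelity(wait_s: float, coherence_time_s: float, f0: float = 1.0) -> float:
    """Toy memory model (assumption): fidelity relaxes from f0 toward 1/4
    (a fully mixed two-qubit state) as exp(-t / T)."""
    return 0.25 + (f0 - 0.25) * math.exp(-wait_s / coherence_time_s)


def mean_fidelity(p_success: float, attempt_time_s: float, coherence_time_s: float,
                  runs: int = 5_000, seed: int = 11) -> float:
    """Average fidelity of a stored pair that must wait while a link is retried."""
    rng = random.Random(seed)
    samples = [
        idle_fidelity(attempts_until_success(p_success, rng) * attempt_time_s,
                      coherence_time_s)
        for _ in range(runs)
    ]
    return statistics.mean(samples)


for p in (0.5, 0.1, 0.01):  # more loss -> lower per-attempt success probability
    print(f"p_success={p:<5} mean fidelity={mean_fidelity(p, 1e-4, 1e-2):.3f}")
```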

Yeah, so that's

the primary reason we're

introducing losses: to study

what happens to the physical systems.

And generally, at least in the things I

work on, because the primary goal

of quantum networks right now is to enable

entanglement distribution or QKD,

we're primarily looking at key rates

or entanglement distribution rates,

and that's what the loss parameter

is basically for.

Dan: Okay.

Let's talk about

simulation of entanglement.

To me it would just mean that in a

simulator, if something's entangled,

you're just configuring the nodes so that

they respond in a particular way once a measurement

is made on one side.

And that if there are errors,

then you lose some of the Bell

pairs that are being sent across.

Is it as simple as that or

is there some more complexity

to modeling the entanglement?

Like the rotation of the qubits

and the superposition

state that they're in and so on.

Steve: Yeah, so for simulating entanglement,

most of these simulators are simulating

density matrices. We basically just model the

qubit using the density matrix formalism,

and then you can see exactly where

the noise is, but there's a little bit more

complexity to it as well, because

when you're simulating

entanglement, there's usually

more than one qubit involved.

Yes, there always is more than one qubit

involved in an entangled state, and you

don't want the programs to allow the nodes

to act on qubits that aren't physically

present at their simulated location.

So let's say I'm simulating Alice and Bob,

and Alice generates two qubits, entangles

them and sends one of those qubits to Bob.

The simulation engine should

prevent Alice from acting on

Bob's qubit; it's impossible.

If she doesn't have access

to it, the engine should block that.

So there's an extra layer of

not only what the qubit's state is,

but also who owns that qubit and where it is.

Let's say: where is the qubit, and

what's its state?

And yeah.

So we have to make sure in the

simulations that we're not cheating.

We're always cheating in simulation,

but not cheating to an extent

that could never be done in practice.

Alice can never act on Bob's qubit

if they're not close to each other.
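The "who holds which qubit" layer can be sketched as a small bookkeeping class that every operation must pass through. All names here are hypothetical and the sketch tracks only locations, not quantum states; real frameworks enforce this in their own ways. A lost qubit has no holder, so nobody can act on it.

```python
from typing import Dict, Optional


class LocationError(Exception):
    """Raised when a node tries to act on a qubit it does not physically hold."""


class QubitRegistry:
    """Hypothetical bookkeeping layer: tracks which node holds each qubit."""

    def __init__(self) -> None:
        self._holder: Dict[str, Optional[str]] = {}

    def create(self, qubit_id: str, node: str) -> None:
        self._holder[qubit_id] = node

    def send(self, qubit_id: str, src: str, dst: str) -> None:
        self._check(qubit_id, src)
        self._holder[qubit_id] = dst  # the qubit now sits at the receiving node

    def lose(self, qubit_id: str) -> None:
        self._holder[qubit_id] = None  # lost in the channel: nobody can act on it

    def operate(self, qubit_id: str, node: str, gate: str) -> str:
        self._check(qubit_id, node)
        return f"{node} applied {gate} to {qubit_id}"

    def _check(self, qubit_id: str, node: str) -> None:
        holder = self._holder.get(qubit_id)
        if holder != node:
            raise LocationError(f"{node} cannot act on {qubit_id}; holder is {holder}")


reg = QubitRegistry()
reg.create("q1", "Alice")
reg.create("q2", "Alice")  # Alice prepares (and would entangle) q1 and q2 locally
reg.send("q2", "Alice", "Bob")
print(reg.operate("q1", "Alice", "H"))
try:
    reg.operate("q2", "Alice", "X")  # q2 is with Bob now, so this must be blocked
except LocationError as err:
    print("blocked:", err)
```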

Dan: Yeah, the results need

to be meaningful, right?

And if steps are

taken that are impossible in a

physical deployment, then the results

won't be meaningful when and if it becomes

possible to replicate what's being

done in the simulation.

So it needs to be as

realistic as possible.

You mentioned density matrix, so

I understand that to be a matrix,

i.e. a mathematical matrix that

describes the quantum state

of the system. Is that per qubit

or is it for the whole system?

In which case it would cover

multiple qubits, and matrices obviously

can get very big.

Steve: Yeah, this is one of the key

advantages of network simulation:

it's generally per qubit

until entanglement is created, and then you

need to expand the density matrix. But

in network simulation

we don't deal with massive

entangled states, like I was saying.

So it's per system, let's say per

entangled system,

but not all of the system at once.

I think doing it all at once would

make it probably impossible, because

in simulations, you're generating

thousands of qubits, maybe millions

of qubits, in a simulated fashion.

It could be that they

all exist at once, and

then you have no way to simulate

that. But you can easily simulate

a million two-by-two matrices, and

those would represent qubits, or

four-by-four matrices, which represent Bell pairs.
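The 2x2 versus 4x4 picture can be shown directly. This pure-Python sketch builds the density matrix of a single qubit in |+> and of the Bell pair (|00> + |11>)/sqrt(2), then applies two-qubit depolarizing noise, rho -> (1 - p) rho + p I/4 (an illustrative noise model), and measures the fidelity with the ideal pair, which drops to 1 - 3p/4.

```python
import math
from typing import List

Vector = List[complex]
Matrix = List[List[complex]]


def density_matrix(psi: Vector) -> Matrix:
    """|psi><psi| for a pure state given as a list of amplitudes."""
    return [[a * b.conjugate() for b in psi] for a in psi]


def fidelity(rho: Matrix, psi: Vector) -> float:
    """<psi| rho |psi>: overlap of a (possibly noisy) state with a pure target."""
    n = len(psi)
    return sum(psi[i].conjugate() * rho[i][j] * psi[j]
               for i in range(n) for j in range(n)).real


def depolarize_pair(rho: Matrix, p: float) -> Matrix:
    """Two-qubit depolarizing noise: rho -> (1 - p) * rho + p * I / 4."""
    return [[(1 - p) * rho[i][j] + (p / 4 if i == j else 0) for j in range(4)]
            for i in range(4)]


s = 1 / math.sqrt(2)
plus: Vector = [complex(s), complex(s)]              # one qubit -> 2x2 matrix
phi_plus: Vector = [complex(s), 0j, 0j, complex(s)]  # (|00> + |11>)/sqrt(2) -> 4x4 matrix

rho_qubit = density_matrix(plus)
rho_bell = density_matrix(phi_plus)
print(f"single qubit: {len(rho_qubit)}x{len(rho_qubit[0])}, "
      f"Bell pair: {len(rho_bell)}x{len(rho_bell[0])}")
print(f"ideal Bell fidelity: {fidelity(rho_bell, phi_plus):.3f}")
print(f"after 10% depolarizing: {fidelity(depolarize_pair(rho_bell, 0.1), phi_plus):.3f}")
```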

Dan: Yeah.

Yeah.

Okay.

Fascinating.

Steve: Yeah, that's one big

advantage for network simulation.

It's easier to achieve somehow.

Dan: Okay.

Did you answer my question about

the simulation of entanglement?

I think so.

If Bob's

part of the entangled pair is lost en

route, then the simulator also needs

to take that into account, right?

So that if Alice makes a measurement,

then it doesn't have any impact on

anything that Bob is holding.

And Bob can't make the measurement

on the supposed qubit that would've

arrived had there not been loss.

And I think we're too early on to have

any... actually, how, in that scenario,

would the node that Bob has tell

Alice that it didn't receive the

other end of the entangled pair? Is there

some bidirectional information flow

there, maybe over the classical channel?

Does there need to be some kind of

tagging of qubits or some

identification of qubits?

Is that stuff simulated at

this point in time as well?

Steve: Yeah, so what's

interesting is this is exactly what

needs to be developed to perform

entanglement distribution in reality.

So how does Bob act

when the qubit is lost?

How does Bob even

know that the qubit was lost?

Those things actually come

down to realistic things;

that's no longer simulation.

We still have to write the protocols

in order to deal with that.

When the qubit is lost in

simulation, then in practice,

within the scope of the simulation,

it's really lost.

It means Bob can't access it, Alice can't

access it, and the simulation should

determine that none of the parties can

access it and prevent any access from

happening in the simulation engine.

Then the question is,

how does Bob respond?

Maybe he waits X amount of

seconds for the qubit to arrive.

If he detects nothing, he will

send a message back to Alice.

But that's the protocol; those are the

instructions that have to be programmed.

And it's up to the author of

the simulation to write those

instructions and handle loss.
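One way a simulation author might program Bob's response is sketched below as a hypothetical timeout-and-acknowledge protocol: Bob listens for a fixed window, then sends an ACK or NACK over the classical channel, and Alice retries after a NACK. The simulator decides loss, while Bob's code only sees whether something was detected. For simplicity each attempt is charged the full listening window even when the qubit arrives early; the timing values are illustrative assumptions.

```python
import random
from typing import List, Tuple


def distribute_with_timeout(p_arrive: float, timeout_s: float, classical_delay_s: float,
                            max_tries: int, seed: int = 5) -> Tuple[bool, float, List[str]]:
    """Hypothetical loss-handling protocol, written the way a simulation author might.

    Each attempt: Alice sends half of a pair; Bob listens for `timeout_s`.
    If he detects it he sends an ACK, otherwise a NACK, over the classical
    channel (taking `classical_delay_s`), and Alice retries after a NACK.
    Returns (success, elapsed simulated time, message log).
    """
    rng = random.Random(seed)
    elapsed = 0.0
    log: List[str] = []
    for attempt in range(1, max_tries + 1):
        detected = rng.random() < p_arrive  # the simulator decides loss; Bob only sees the outcome
        elapsed += timeout_s + classical_delay_s  # full listening window plus ACK/NACK (simplification)
        if detected:
            log.append(f"attempt {attempt}: detected, ACK sent")
            return True, elapsed, log
        log.append(f"attempt {attempt}: nothing detected, NACK sent")
    return False, elapsed, log


ok, t, messages = distribute_with_timeout(p_arrive=0.3, timeout_s=1e-3,
                                          classical_delay_s=5e-4, max_tries=20)
print(ok, f"{t * 1e3:.1f} ms")
print("\n".join(messages))
```

Comparing protocols then amounts to swapping this function for another strategy and measuring the resulting distribution rate under the same loss parameter.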

So what someone might do is they might

come up with a protocol for what to

do when the entangled pair is lost.

And their response,

their coordination approach, is much

better than someone else's approach.

And then you write a paper and

you show that your entanglement rate

with this loss parameter is

a factor of two better than

what was previously stated.

So it comes down to what's

the protocol, what's the idea,

and what to do with this loss.

So the simulator just gives you

the fact that there is loss.

How you respond to loss is

up to the creative author.

So it's down to the creativity of the author.

Dan: It seems like total chaos at the moment

in terms of anything

that comes out in a paper like that:

the number of variables in the system,

how it's developed, the measurements

they're taking, the rate, the compute

capabilities they've got, the mathematical

equations underpinning it.

All of these things are variable,

so it's incredibly hard when you

see something like that, and

you do see papers on some kind of

incremental improvement on a previous

behavior or a new type of outcome.

You've almost got to take people's

word for it, because the complexity

behind it is so great,

unless you have a PhD in physics

and have been studying this for a

long time. It might be easier for

you than for me, I would think.

But is there still a level of assuming

that correlations and things are all

relevant,

and that statements on

improvements are factual?

Steve: Yeah, this is an interesting topic.

So usually when you have a paper

whose results are based on a

simulation, what it looks like is

there's a protocol written explicitly so

you can read exactly what the instructions

are, and then the results are: we

programmed those instructions into the

simulation and then ran the simulation,

and this is what came out.

I don't like it when, and I'm also guilty of

this, so I should be careful with my own

words, there are papers like that,

and I have papers like that, where

the simulation code is not public.

There are multiple reasons

why it might not be public.

For example, when you work at a company

like I do, you have to go through some

hoops to get your code open source.

It's not always difficult, but

it's also not always trivial.

It's not always worth

the time of doing it.

And actually, many papers have

this flaw where the code is not available.

Can you actually

validate it? Can I go and read the code

to see that this is the protocol

that was implemented and these

are the results that came out?

Or could I just go and generate this trend

using some plotting library, and then

just copy and paste

the chart into my paper and say,

look, it's better than the state of the art?

So there is always some level

of trust where you say, I believe that

these authors went and wrote the code,

and they wrote it correctly, bug-free,

and that's the result,

and it's better than what came before.

I don't like it.

I think every simulation paper should

make its code available so anyone

can go and verify that the results

are what they're supposed to be.

Dan: But even then, who's going to

check all the different simulators:

the algorithms, the code, the

processes in the code and so on? The

methodology differs; people write code differently,

and they might get the same outcome,

but the code reads very differently.

It's almost like there needs to be

some kind of standard simulator framework.

I mean, you mentioned the frameworks

earlier on, NetSquid, QuNetSim and so on,

and I guess they're

all different as well.

And in a lot of cases we're talking about

a simulator that the researcher

has written themselves rather

than a public framework.

Is there enough in these simulation

frameworks that are open source or

close to open source to help

solve that issue, where there's a big

chasm in knowing whether you

are comparing apples with apples when

looking at different simulation outcomes?

Steve: At the moment, there's not really

a way that you can

standardize the simulations,

with one exception: I think

NetSquid is, or at least was,

pushing in this direction.

I don't know what the status is

or whether they're still working on this,

but they have this idea of modules,

or plugins.

So if you want to build your own

entanglement distribution protocol,

you can define that as a plugin, put it

into the NetSquid framework, and then

other people can use it as well.

So if everyone is sharing the same

modules and then improving on a

protocol, you can trust that a bit

more because other people have used it.

It's been through some review.

It's not the first time someone

has used it, it's open source,

and everyone can read the code.

So I like that idea, being able to

take other people's work, put it into your

own work, play around with it, modify it,

and then say, look, it's just an

epsilon change in the code from before,

but it's this much improvement

in the protocol.

It's a smaller step than

writing your own simulation framework,

running your simulations on

that framework and then saying, look, I

have these nice graphs. And that's pretty

common in quantum, because the tools

are hard to use, so it's sometimes

easier just to write your own framework

to do exactly what you need it to do,

because you know how to do it, instead

of spending two weeks or a

month learning another framework.

It's a rough area.

I think it's still something

that needs to be improved.
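To make the plugin idea concrete, here is a toy registry in plain Python. It is not NetSquid's actual API: the `register` decorator, the `pairs_delivered` method and the multiplexing tweak are all hypothetical. The sketch shows how a shared module can be reused and changed by a small amount, so that two protocols are compared through the same interface with identical inputs.

```python
from typing import Callable, Dict, Protocol


class DistributionProtocol(Protocol):
    """Interface a shared protocol module is assumed to implement."""

    def pairs_delivered(self, p_link: float, rounds: int) -> float:
        ...


# Shared registry: name -> factory that builds a protocol instance.
REGISTRY: Dict[str, Callable[[], DistributionProtocol]] = {}


def register(name: str):
    """Decorator that publishes a protocol class under a shared name."""
    def wrap(cls):
        REGISTRY[name] = cls
        return cls
    return wrap


@register("baseline")
class Baseline:
    """Someone else's module: one attempt per round."""

    def pairs_delivered(self, p_link: float, rounds: int) -> float:
        return p_link * rounds  # expected number of successful rounds


@register("baseline-multiplexed")
class Multiplexed(Baseline):
    """A small change on top of Baseline: k parallel attempts per round."""

    def __init__(self, k: int = 4) -> None:
        self.k = k

    def pairs_delivered(self, p_link: float, rounds: int) -> float:
        # A round succeeds if at least one of the k attempts succeeds.
        return (1 - (1 - p_link) ** self.k) * rounds


if __name__ == "__main__":
    for name, factory in REGISTRY.items():
        print(name, round(factory().pairs_delivered(0.1, 1000), 1))
```

Because both entries run through the same interface and inputs, a reader can attribute any improvement to the few changed lines in `Multiplexed` rather than to a different simulator.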

Dan: Yeah, so many unknowns

and questions when it comes to

evaluating what gets put out there.

Interesting.

It's gonna be interesting to

see how it evolves over time.

Steve: But if you look towards

quantum computing, I think it probably

started off in that stage as well.

But as the engines got more

powerful, like Qiskit, there are

probably over a thousand papers

written based on Qiskit at this point.

And I think the step that needs to

be taken is someone has to write

a Qiskit for quantum networks.

There has to be a powerful,

well-supported simulation engine,

with lots of effort put in to make it

look as good as possible, easy to

use, with documentation that will get

people attracted to the simulation

framework. That's what we need.

Right now things are half

dead: not maintained, buggy, no

documentation, too hard to use.

That's quantum network simulation.

So it's not a

peaceful environment.

Qiskit, on the other hand,

is so well developed, so much

involvement, community, everything.

That's how we need quantum

network simulation to be, I think.

Dan: Yeah.

Awesome.

So I wanted to ask

about repeaters, and I don't

want to go deep into

the topic of what a repeater is.

It's basically a node used to

extend the reach of links connecting

multiple nodes, using entanglement,

and let's not go into the detail

of how it works. But when it comes

to simulation, is there anything

special about simulating a repeater?

Have you seen

anything in any of those frameworks

about simulating repeaters,

or are we not there yet?

Steve: Actually, I would

say simulations of quantum repeaters

are probably 50% of the papers that

come out, effectively.

Dan: All right.

Steve: Because it's a combination, though.

There are two directions

on the same path.

So simulation of a quantum repeater

is done for analyzing how well an

entanglement distribution protocol performs

using quantum repeaters.

And then on the other hand, there are the

physical aspects of the quantum repeater:

how does it perform, depending

on the memory, the detection

efficiency, all kinds of things.

So it's really popular to simulate

quantum repeaters because they're the core

of almost everything in the quantum internet.

Yeah,

I can think of a few papers that

have high impact that are based

on simulation of quantum repeaters.
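As a back-of-the-envelope illustration of why the memory matters, here is a sketch under simplifying assumptions of our own: one repeater at the midpoint, ideal memories that never decohere, independent link attempts in synchronized rounds of equal length, and a swap that restarts everything when it fails. Link success is taken as detector efficiency times fiber transmissivity (0.2 dB/km assumed). With memory, the wait is the maximum of two geometric variables, E[max] = 2/p - 1/(2p - p^2). Without memory, both halves must succeed in the same round, giving 1/p^2.

```python
def link_success(length_km: float, detector_eff: float,
                 loss_db_per_km: float = 0.2) -> float:
    """Probability one heralded link attempt succeeds (assumed model)."""
    return detector_eff * 10 ** (-loss_db_per_km * length_km / 10)


def rounds_direct(p: float) -> float:
    """Expected rounds for a single direct link: geometric mean 1/p."""
    return 1 / p


def rounds_one_repeater(p_half: float, memory: bool, p_swap: float = 1.0) -> float:
    """Expected rounds for two half-links joined by one entanglement swap."""
    if memory:
        # A finished half-link waits in memory: expected max of two geometrics.
        both = 2 / p_half - 1 / (2 * p_half - p_half ** 2)
    else:
        # No memory: both halves must succeed in the same round.
        both = 1 / p_half ** 2
    # A failed swap starts everything over, so divide by p_swap (Wald's identity).
    return both / p_swap


if __name__ == "__main__":
    total_km, eta_det = 100.0, 0.9
    p_full = link_success(total_km, eta_det)
    p_half = link_success(total_km / 2, eta_det)
    print(f"direct:              {rounds_direct(p_full):7.1f} rounds")
    print(f"repeater, no memory: {rounds_one_repeater(p_half, memory=False):7.1f} rounds")
    print(f"repeater, memory:    {rounds_one_repeater(p_half, memory=True):7.1f} rounds")
```

With these assumed numbers, the memoryless repeater needs more rounds than the direct link, while the memory-assisted one needs far fewer. A physical-layer repeater simulation explores this dependence on memory and detector efficiency in much more detail.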

Dan: Yeah, we'll put those

in the show notes, right?

I think that'd be good to include

that in the show notes for

people to read as a follow up.

Now, one thing you've mentioned

multiple times is the entanglement

distribution protocol.

Again, the same question around

standardization, or I probably

shouldn't use that word.

I would say conformity in the way people

model the entanglement distribution

protocol, and it's probably different

across each of the frameworks.

What are your views

on what needs to be defined

there to make it common?

Is it part of the Qiskit for

networks that you mentioned?

IBM aren't paying us for

this, but maybe

they could sponsor us next time,

given the number of times we mention it.

Yeah.

So could you talk a bit more

about the entanglement distribution protocol?

I know it's a core part of the way the

repeaters and the nodes need to work.

As I see it, it's almost like IP in

traditional IP-routed networking.

IP needs to work

effectively end-to-end, and the

nodes that are on the network need to

interoperate up and down the stack and

be able to communicate in a set way.

And I guess that's what's

required here, isn't it?

Because once the entangled

pairs are set up, that

allows you to do the higher-order

operations using that functionality.

Steve: Yeah, so the way qubits

are transmitted is using this

entanglement distribution and teleportation,

with the repeaters forming long-distance

entanglement connections.

But for the way that entanglement

is distributed, in practice we

can think of it as: okay, a qubit is

generated, another one is generated,

we entangle them, and we send

half to the second person.

In simulation, everything could be done in

this noiseless fashion, and

you could cheat on so many things.

For example, the classical messaging

involved in producing an entangled pair

is almost always ignored in simulation.

So what messages do Alice and Bob need to

exchange so that they reliably agree that

the half

of the entangled pair that Alice sent

to Bob is the one that arrived,

and that it's there and ready to use?

In simulation you could ignore all of

that messaging and just perform your

operations, because each one is an event and you can

use your god-like ability in simulation to

determine that those events happened.

So my point there is that the protocol,

the entanglement distribution protocol,

includes those classical messaging parts.

What I call the entanglement

distribution protocol includes both

the transmission of the quantum

states plus the classical messaging

on top that allows Alice and Bob

to know, yes, those entangled qubits

are there and they're ready to operate on.

Feel free to send me the

next message, that kind of thing.

So

Dan: Yeah.

Steve: like a handshake.
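The handshake Steve describes can be sketched as a tiny discrete-event simulation. All assumptions here are ours. Alice tags each pair with an ID and sends half to Bob over a fiber with some arrival probability. Bob stores it and returns a classical ACK carrying that ID over a lossless channel. Alice marks the pair ready only when the ACK arrives, and retries with a new ID if none arrives within a timeout slightly longer than one round trip. Light in fiber is taken as roughly 2e5 km/s. The "oracle" time is when a god-like simulator would already consider the pair delivered.

```python
import heapq
import random

C_FIBER_KM_PER_S = 2.0e5  # approx. speed of light in optical fiber


def run_handshake(length_km: float, p_arrive: float, seed: int = 0):
    """Return (oracle_time_s, ready_time_s, attempts) for one delivered pair."""
    if not 0 < p_arrive <= 1:
        raise ValueError("p_arrive must be in (0, 1]")
    delay = length_km / C_FIBER_KM_PER_S  # one-way latency, quantum or classical
    timeout = 2.2 * delay  # one round trip plus a 10% margin (assumed)
    rng = random.Random(seed)
    events = []  # min-heap of (time, sequence, kind, pair_id)
    counter = 0

    def schedule(t: float, kind: str, pair_id: int) -> None:
        nonlocal counter
        heapq.heappush(events, (t, counter, kind, pair_id))
        counter += 1

    schedule(0.0, "alice_send", 0)
    oracle_time = None
    while events:
        t, _, kind, pair_id = heapq.heappop(events)
        if kind == "alice_send":
            if rng.random() < p_arrive:  # photon survives the fiber
                schedule(t + delay, "bob_store", pair_id)
            schedule(t + timeout, "alice_timeout", pair_id)
        elif kind == "bob_store":
            oracle_time = t  # an oracle simulator would stop here
            schedule(t + delay, "alice_ack", pair_id)  # ACK carries the ID
        elif kind == "alice_ack":
            return oracle_time, t, pair_id + 1  # both sides now agree
        elif kind == "alice_timeout":
            schedule(t, "alice_send", pair_id + 1)  # give up, use a fresh ID
    raise RuntimeError("event queue emptied unexpectedly")


if __name__ == "__main__":
    oracle, ready, attempts = run_handshake(100, p_arrive=0.3, seed=7)
    print(f"oracle {oracle * 1e3:.2f} ms, agreed {ready * 1e3:.2f} ms, "
          f"{attempts} attempt(s)")
```

The agreed time trails the oracle time by one classical one-way latency. In addition, every lost pair makes Alice wait a full timeout before retrying, a cost that a simulator skipping the messaging would not capture.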

Dan: And who's working on that?

It sounds like in all the simulators,

they're ignoring it because perhaps it

hasn't been standardized and it's too

complex to create your own version.

Why bother?

Just assume that it works, and then

the user of the simulator can start to

think about the higher-order stuff.

But in the real world, none of

that's going to work until there's

a stable ability to do this:

to distribute the entangled

pairs and manage them, right?

Steve: Exactly.

So it's like finding

the best-case scenario.

That's likely impossible in practice, but it's still

the best-case scenario, and if it's non-zero,

that's the goal.

If I do this without even

needing classical messaging yet,

can I achieve my protocol

at all?

And then it only gets worse

as you add classical messaging.

The performance only degrades.

So I think that's why it's

still meaningful, because you're

thinking: is it even possible

in some aspect? And then you make

it harder for yourself by adding

noise, by adding classical messaging,

and it just gets worse and worse.

But you need to raise the bar

quite high before you can bring

it down to the realistic level

and still be off the ground.
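The "start from the best case and only get worse" point can be shown with simple arithmetic. This is a sketch under stated assumptions: a source that fires at a fixed repetition rate, a single memory per node, and, when messaging is modelled, a memory that cannot be reused until the confirmation has made a round trip. The 1 MHz rate and the 0.1 success probability (about 50 km at an assumed 0.2 dB/km) are illustrative only.

```python
C_FIBER_KM_PER_S = 2.0e5  # approx. speed of light in optical fiber


def pair_rate(length_km: float, p_success: float, source_rate_hz: float,
              with_messaging: bool) -> float:
    """Delivered pairs per second under the stated toy assumptions."""
    period = 1 / source_rate_hz  # idealized: a new attempt every source pulse
    if with_messaging:
        # The memory stays blocked until the confirmation returns (round trip).
        round_trip = 2 * length_km / C_FIBER_KM_PER_S
        period = max(period, round_trip)
    return p_success / period


if __name__ == "__main__":
    for messaging in (False, True):
        rate = pair_rate(50, 0.1, 1e6, with_messaging=messaging)
        print(f"messaging={messaging}: {rate:,.0f} pairs/s")
```

Adding the round trip can only lengthen the attempt period, so the messaging-aware rate is never higher than the idealized one. With these numbers it drops by a factor of 500.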

Dan: I think what we're highlighting here

is that there are so many areas that

need to evolve for an end-to-end

system, for a quantum internet, to exist.

Talking about simulation

like this, it's quite eye-opening

to highlight the gaps.

It's certainly been useful for me

to think about them with you,

so thanks, Steve, as usual.

Is there anything else you

wanted to tag on the end?

I guess we could always

come back to simulation

in the future as things evolve,

if there are any big jumps in the

code and software out there and the

developments people are making.

Thanks so much.

It's a fascinating topic with so

many unknowns and lots of ambiguity.

Steve: Yep.

Thanks.

Great chat as always.

We'll catch you next time.

Dan: I'd like to take this moment to

thank you for listening to the podcast.

Quantum networking is such a broad domain,

especially considering the breadth of

quantum physics and quantum computing, all

as an undercurrent that's easy to get sucked

into. So much is still in the research

realm, which can make it really tough for

a curious IT guy to know where to start.

So hit subscribe or follow me on your

podcast platform and I'll do my best

to bring you more prevalent topics

in the world of quantum networking.

Spread the word.

It would really help us out.
